As part of the Affective Computing Class taught by Prof. Rosalind Picard, I explored micromovement monitoring via cameras as a way to detect affect in humans.

Curious about a speculative future world of AI-generated music, I wanted the ‘music’ to pay attention to the affect of the listener. In this particular case, the generative music algorithms paid attention to the listener's microexpressions.

The initial hypothesis was: “Listeners find emotionally attentive generative music more engaging than static generative music.”

Unlike common tools for monitoring music listening, such as the CRDI (Continuous Response Digital Interface), camera-based micromovement detection made it possible to measure high-dimensional affect in a time-synchronous manner.

A study was performed with 15 participants to detect various categories of micromovements during music listening, consisting of a pre-test, a listening phase, and a post-test survey.

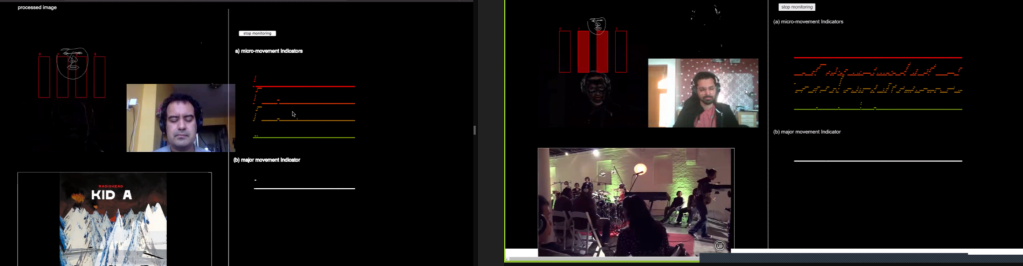

Here is a video demonstrating the automated generative music algorithm choosing tracks and elements of the song based on the intensities of micromovements of the listener.
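As a rough illustration of the idea, here is a minimal sketch of how a selection step driven by micromovement intensities could look. It is not the code used in the project: the category names, track names, the `choose_track` function, and the 0.5 threshold are all hypothetical, and intensities are assumed to be normalized to the range 0 to 1.

```python
from typing import Dict

# Hypothetical mapping from a micromovement category to a track or layer.
# These names are illustrative only and do not come from the study.
TRACKS_BY_MOVEMENT: Dict[str, str] = {
    "smile": "bright_layer",
    "brow_furrow": "tense_layer",
    "head_nod": "rhythmic_layer",
}


def choose_track(
    intensities: Dict[str, float],
    current: str,
    threshold: float = 0.5,  # assumed cutoff, not a value from the study
) -> str:
    """Pick the track mapped to the strongest micromovement.

    If no known category is present, or the strongest one is below the
    threshold, keep playing the current track.
    """
    # Ignore categories that have no track mapped to them.
    known = {k: v for k, v in intensities.items() if k in TRACKS_BY_MOVEMENT}
    if not known:
        return current
    strongest = max(known, key=known.get)
    if known[strongest] < threshold:
        return current
    return TRACKS_BY_MOVEMENT[strongest]
```

In a setup like this, `choose_track` would be called once per analysis window of the camera feed, so the music changes only when a micromovement is strong enough to cross the threshold.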

“The emotions - love, mirth, the heroic, wonder, tranquility, fear, anger, sorrow, disgust - are in the audience.” – John Cage (1961)