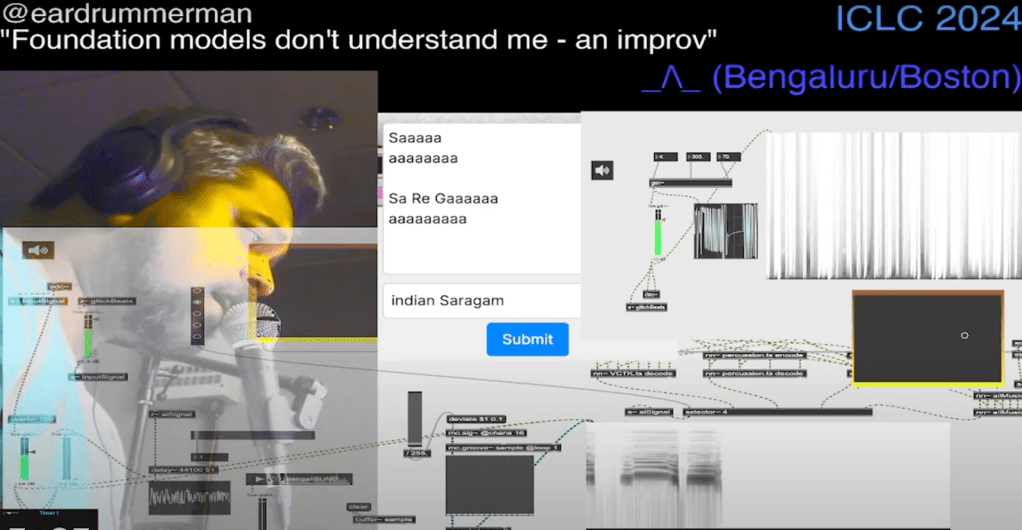

FMDUM – Foundation Models don’t understand me –

is a live improvised performance of Indian musical ideas reflected through

- few small (RAVE – realtime audio variational autoencoders) models trained on traditional percussion instruments and indian vocal excerpts.

- foundation models for lyric conditioned music generation (like SUNO)

- foundation models for genre conditioned music generation (like Stable Audio)

This performance is an ongoing workshop of ideas for showcasing the following key ideas

- Shortcomings of foundation models to generate folk and ethnic music from an indian music lens

- Possibilities of live performance when a human musician reacts, interacts and remixes AI generated music showcasing the shifting value of creation in the age of synthetic media.

This performance is an excerpt from a short demo performed online as a virtual part of the ICLC live coding conference in June 2024.

(more coming soon…)

here is a sneak peak: